IEEE Conference on Computer Vision and Pattern Recognition 2017

Light Field Reconstruction Using Deep Convolutional Network on EPI

Gaochang Wu 1,2, Mandan Zhao 2, Liangyong Wang 1, Qionghai Dai 2, Tianyou Chai 1, Yebin Liu 2

1. State Key Laboratory of Synthetical Automation for Process Industries, Northeastern University
2. Department of Automation, Tsinghua University


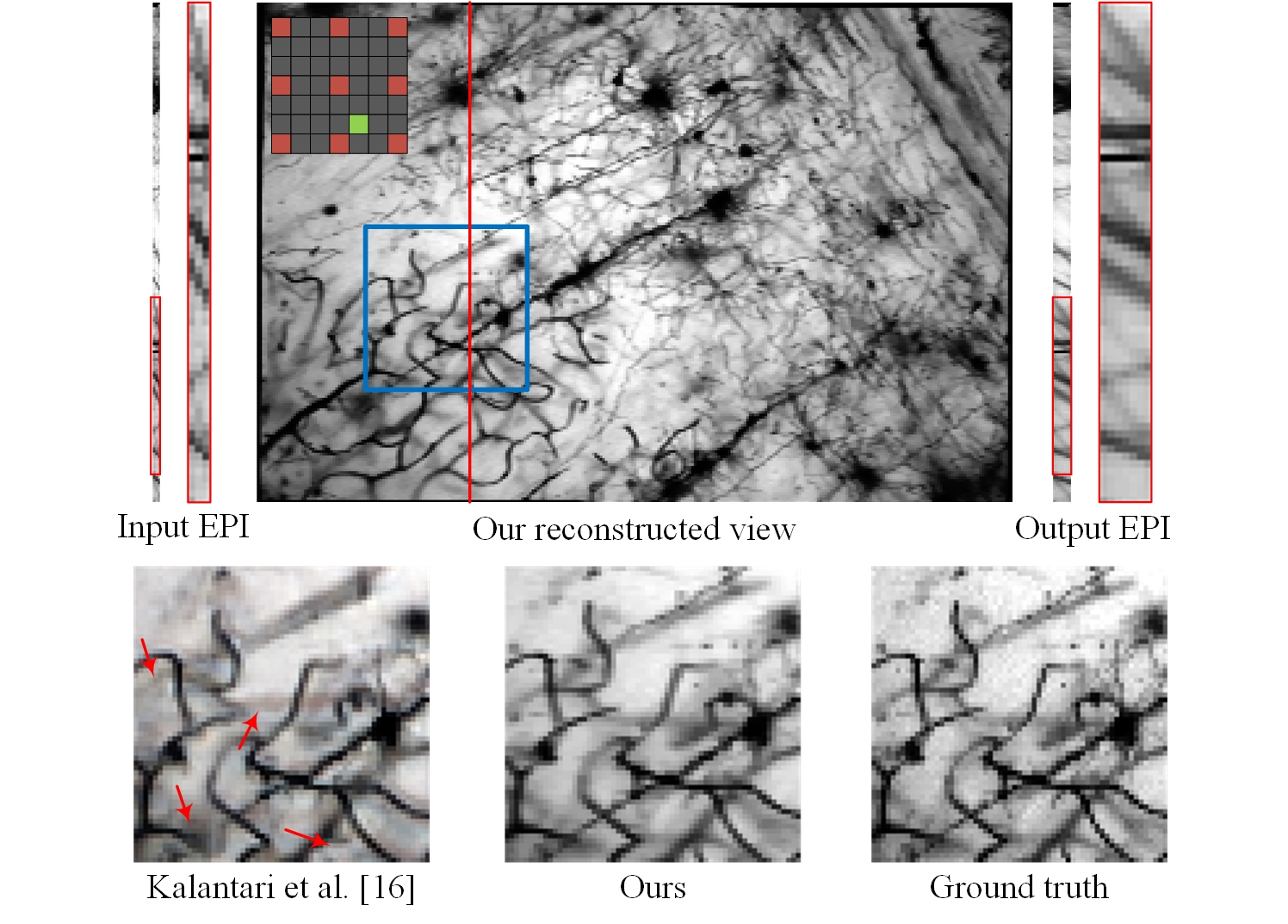

Figure.1: Comparison of light field reconstruction results on Stanford microscope light field data Neurons 20× [21] using 3×3 input views. The proposed learning-based EPI reconstruction produces better results in this challenging case.

Abstract

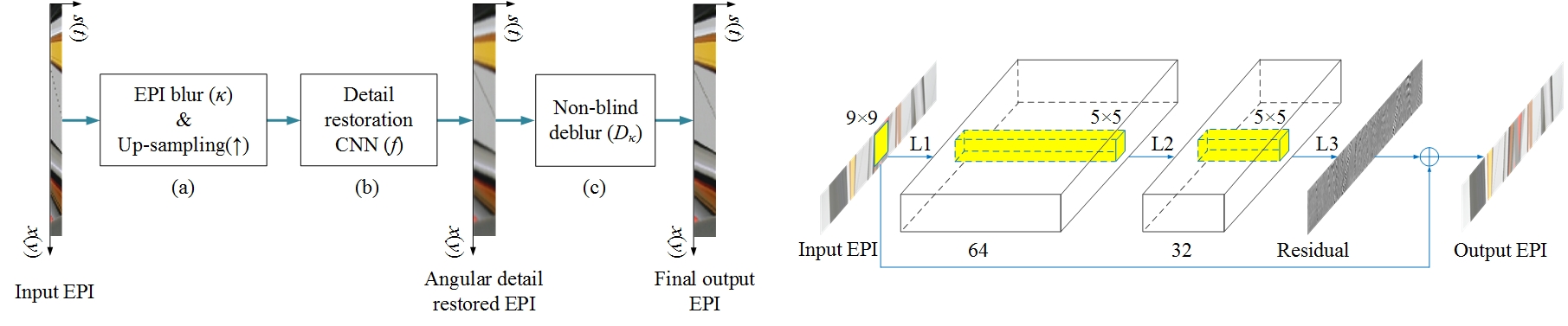

In this paper, we take advantage of the clear texture structure of the epipolar plane image (EPI) in light field data and model the problem of light field reconstruction from a sparse set of views as CNN-based angular detail restoration on the EPI. We point out that one of the main challenges in sparsely sampled light field reconstruction is the information asymmetry between the spatial and angular domains, where the detail portion in the angular domain is damaged by undersampling. To balance the spatial and angular information, the spatial high-frequency components of an EPI are removed using an EPI blur before the EPI is fed to the network. Finally, a non-blind deblur operation is used to recover the spatial detail suppressed by the EPI blur. We evaluate our approach on several datasets, including synthetic scenes, real-world scenes, and challenging microscope light field data. We demonstrate the high performance and robustness of the proposed framework compared with state-of-the-art algorithms. We also show a further application to depth enhancement using the reconstructed light field.
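The blur, restore, deblur order described in the abstract can be illustrated with a minimal NumPy sketch. Everything specific here is an assumption made for illustration and is not taken from the paper or its released code: the EPI is treated as a 2D array of shape (angular, spatial), the blur is a 1D Gaussian applied circularly along the spatial axis, the non-blind deblur is a simple Wiener-style inverse filter, and `restore_detail` is a hypothetical callable standing in for the trained network.

```python
import numpy as np

def gaussian_kernel_1d(sigma, radius):
    """Normalized 1D Gaussian kernel (assumed blur kernel, for illustration)."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def kernel_spectrum(kernel, width):
    """FFT of the kernel, zero-padded to `width` with its centre at index 0."""
    padded = np.zeros(width)
    padded[:kernel.size] = kernel
    padded = np.roll(padded, -(kernel.size // 2))
    return np.fft.fft(padded)

def epi_blur(epi, kernel):
    """Blur every EPI row along the spatial axis (circular convolution)."""
    K = kernel_spectrum(kernel, epi.shape[1])
    return np.real(np.fft.ifft(np.fft.fft(epi, axis=1) * K, axis=1))

def non_blind_deblur(epi, kernel, eps=1e-3):
    """Wiener-style inverse filter using the known kernel (a stand-in,
    not necessarily the deblur method used by the authors)."""
    K = kernel_spectrum(kernel, epi.shape[1])
    H = np.conj(K) / (np.abs(K) ** 2 + eps)  # eps regularizes near-zero frequencies
    return np.real(np.fft.ifft(np.fft.fft(epi, axis=1) * H, axis=1))

def reconstruct(epi, kernel, restore_detail):
    """Blur the EPI, restore angular detail, then undo the spatial blur."""
    blurred = epi_blur(epi, kernel)
    restored = restore_detail(blurred)  # hypothetical stand-in for the CNN
    return non_blind_deblur(restored, kernel)
```

For example, `reconstruct(epi, gaussian_kernel_1d(1.0, 3), lambda x: x)` runs the pipeline with an identity function in place of the network, which isolates the effect of the blur and deblur pair. The deblur is "non-blind" because the kernel passed to it is the same known kernel that produced the blur.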


Figure.2: Left: The proposed learning-based framework for light field reconstruction on EPI. Right: The proposed detail restoration network. The network is composed of three layers, where the first and the second are followed by a rectified linear unit (ReLU). The final output of the network is the sum of the predicted residual (detail) and the input.
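The structure in the caption (three convolutional layers, ReLU after the first two, and the input added back to the predicted residual) can be sketched as a NumPy forward pass. This is only an illustration with untrained random weights: the channel counts and kernel sizes below are placeholders and are not taken from this page, and the helper names `conv2d_same`, `init_params`, and `detail_restoration` are hypothetical.

```python
import numpy as np

def conv2d_same(x, w, b):
    """Stride-1, zero-padded convolution (cross-correlation, as in CNNs).
    x: (C_in, H, W); w: (C_out, C_in, kH, kW) with odd kH, kW; b: (C_out,)."""
    c_out, _, kh, kw = w.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((0, 0), (ph, ph), (pw, pw)))
    h, wd = x.shape[1], x.shape[2]
    out = np.zeros((c_out, h, wd))
    for i in range(kh):
        for j in range(kw):
            patch = xp[:, i:i + h, j:j + wd]  # shifted view, (C_in, H, W)
            out += np.einsum('oc,chw->ohw', w[:, :, i, j], patch)
    return out + b[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def init_params(rng, channels=(1, 16, 8, 1), kernels=(5, 3, 3), scale=1e-2):
    """Random weights; channel counts and kernel sizes are placeholders."""
    params = []
    for c_in, c_out, k in zip(channels[:-1], channels[1:], kernels):
        w = scale * rng.standard_normal((c_out, c_in, k, k))
        params.append((w, np.zeros(c_out)))
    return params

def detail_restoration(epi, params):
    """Three conv layers; ReLU after the first two; output = input + residual."""
    (w1, b1), (w2, b2), (w3, b3) = params
    x = epi[None, :, :]                      # add a channel axis: (1, H, W)
    h = relu(conv2d_same(x, w1, b1))
    h = relu(conv2d_same(h, w2, b2))
    residual = conv2d_same(h, w3, b3)        # predicted detail, no ReLU
    return epi + residual[0]
```

Because the output is the input plus a residual, the layers only need to model the missing detail rather than reproduce the whole EPI. In this sketch, setting the last layer's weights and bias to zero makes `detail_restoration` return its input unchanged.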

Results


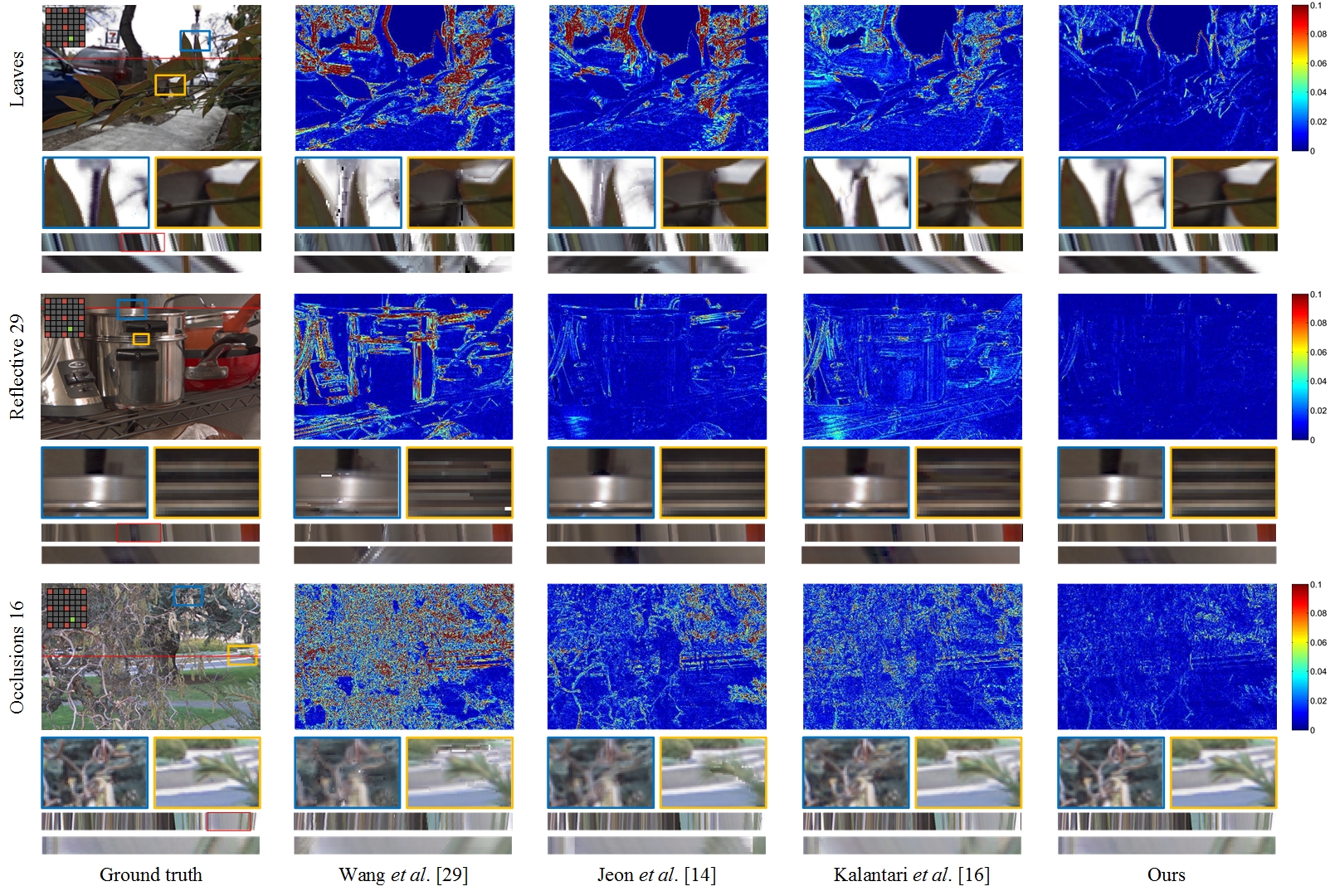

Figure.3: Comparison of the proposed approach against other methods on the real-world scenes. The results show the ground truth images, error maps of the synthetic results in the Y channel, close-up versions of the image portions in the blue and yellow boxes, and the EPIs located at the red line shown in the ground truth view. The EPIs are upsampled to an appropriate scale in the angular domain for better viewing. The lowest image in each block shows a close-up of the portion of the EPIs in the red box.


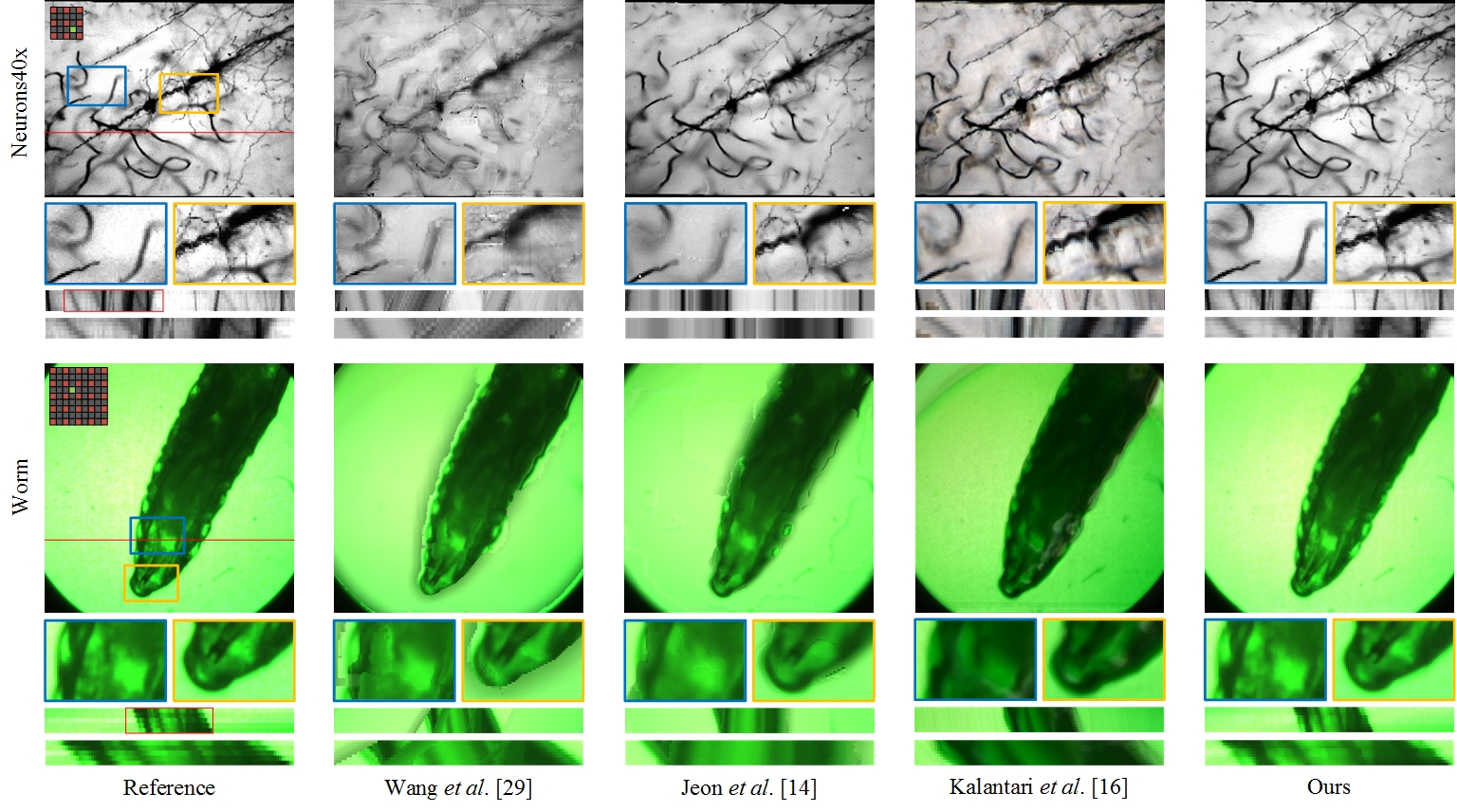

Figure.4: Comparison of the proposed approach against other methods on the microscope light field datasets. The results show the ground truth or reference images, synthetic results, close-up versions in the blue and yellow boxes, and the EPIs located at the red line shown in the ground truth view.

Video

Paper download: EPICNN.pdf

Supplementary download: EPICNN_Supplementary.pdf

Source code: version 1, version 2

Citation:

Gaochang Wu, Mandan Zhao, Liangyong Wang, Qionghai Dai, Tianyou Chai, and Yebin Liu, Light Field Reconstruction Using Deep Convolutional Network on EPI, IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017

@inproceedings{EPICNN17,

author = {Gaochang Wu and Mandan Zhao and Liangyong Wang and Qionghai Dai and Tianyou Chai and Yebin Liu},

title = {Light Field Reconstruction Using Deep Convolutional Network on EPI},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017},

year = {2017},

}