SIGGRAPH 2011

Video-based Characters–Creating New Human Performances from a Multi-view Video Database

Feng Xu, Yebin Liu, Carsten Stoll, James Tompkin, Gaurav Bharaj, Qionghai Dai, Hans-Peter Seidel, Jan Kautz, Christian Theobalt

Tsinghua University, University College London, MPI Informatik

Abstract

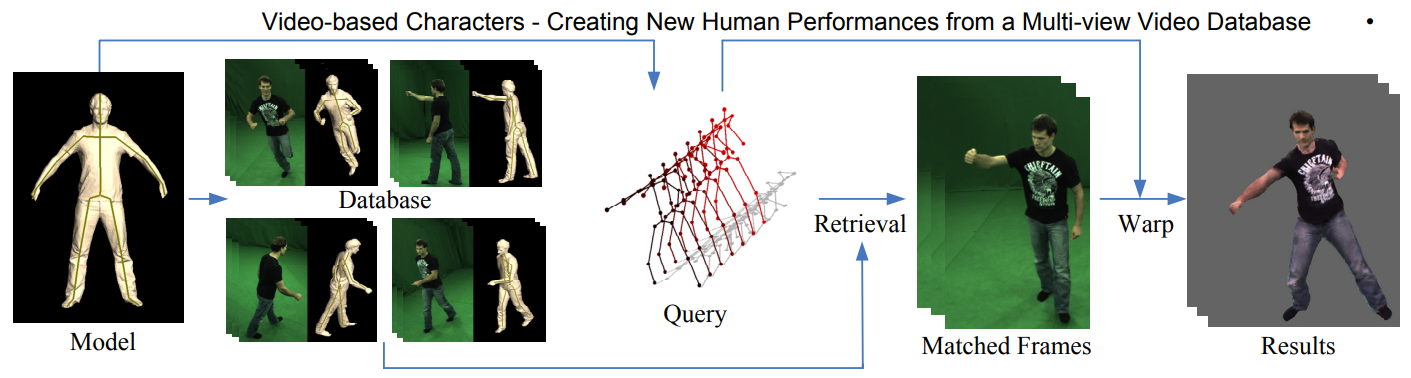

We present a method to synthesize plausible video sequences of humans according to user-defined body motions and viewpoints. We first capture a small database of multi-view video sequences of an actor performing various basic motions. This database needs to be captured only once and serves as the input to our synthesis algorithm. We then apply a marker-less model-based performance capture approach to the entire database to obtain the pose and geometry of the actor in each database frame. To create novel video sequences of the actor from the database, a user animates a 3D human skeleton with novel motion and chooses novel viewpoints. Our technique then synthesizes a realistic video sequence of the actor performing the specified motion based only on the initial database. The first key component of our approach is a new efficient retrieval strategy to find appropriate spatio-temporally coherent database frames from which to synthesize target video frames. The second key component is a warping-based texture synthesis approach that uses the retrieved most-similar database frames to synthesize spatio-temporally coherent target video frames. Among other uses, this makes it easy to create video sequences of actors performing dangerous stunts without placing them in harm’s way. We show through a variety of result videos and a user study that we can synthesize realistic videos of people, even if the target motions and camera views are different from the database content.
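The retrieval step described in the abstract can be illustrated with a generic dynamic-programming sketch. It assumes each pose is a flattened vector of 3D joint positions, scores a database frame by its Euclidean distance to the query pose, and adds a hypothetical `jump_cost` penalty whenever consecutive selections do not follow the captured clip frame by frame. This is a standard Viterbi formulation, not the paper's retrieval strategy; `pose_distance`, `select_frames`, and `jump_cost` are illustrative names.

```python
import math
from typing import List, Sequence

Pose = Sequence[float]  # hypothetical: flattened 3D joint positions of one frame


def pose_distance(a: Pose, b: Pose) -> float:
    """Euclidean distance between two flattened joint-position vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def select_frames(query: List[Pose], database: List[Pose],
                  jump_cost: float = 1.0) -> List[int]:
    """Pick one database frame index per query frame.

    Data term: pose distance between the query frame and the database frame.
    Smoothness term: 0 if the database index advances by exactly one
    (i.e. the captured clip plays forward), otherwise `jump_cost`.
    The total cost is minimized exactly with dynamic programming (Viterbi).
    """
    n_db = len(database)
    # cost[j]: best total cost of any selection path ending at database frame j
    cost = [pose_distance(query[0], database[j]) for j in range(n_db)]
    back: List[List[int]] = []  # back[t - 1][j]: best predecessor of j at time t
    for t in range(1, len(query)):
        new_cost, ptr = [], []
        for j in range(n_db):
            best_i, best_c = 0, math.inf
            for i in range(n_db):
                c = cost[i] + (0.0 if j == i + 1 else jump_cost)
                if c < best_c:
                    best_i, best_c = i, c
            new_cost.append(best_c + pose_distance(query[t], database[j]))
            ptr.append(best_i)
        cost = new_cost
        back.append(ptr)
    # Backtrack from the cheapest final frame to recover the whole path.
    j = min(range(n_db), key=lambda k: cost[k])
    path = [j]
    for ptr in reversed(back):
        j = ptr[j]
        path.append(j)
    return path[::-1]
```

For example, `select_frames([[0.1], [1.1], [2.1]], [[0.0], [1.0], [2.0], [3.0]])` returns `[0, 1, 2]`. Raising `jump_cost` trades pose accuracy for longer runs of consecutive database frames, which is one simple way to express temporal coherence. The search costs O(T·N²) for T query frames and N database frames, so with a large database one would likely prune candidates first.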


Fig 1. An animation of an actor created with our method from a multi-view video database. The motion was designed by an animator, and the camera motion was recovered from the background footage with a commercial camera tracker. In the composited scene of animation and background, the synthesized character and her spatio-temporal appearance look close to lifelike.
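The caption does not say how the synthesized character was composited onto the tracked background. A common choice is the standard "over" operator with a per-pixel alpha matte; the sketch below assumes straight (non-premultiplied) RGB values in [0, 1] and a hypothetical matte, and the names `over` and `composite` are illustrative.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Straight-alpha 'over': alpha * foreground + (1 - alpha) * background."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


def composite(fg_img: List[List[RGB]], matte: List[List[float]],
              bg_img: List[List[RGB]]) -> List[List[RGB]]:
    """Apply `over` pixel by pixel to equally sized, row-major images."""
    return [
        [over(f, a, b) for f, a, b in zip(fg_row, a_row, bg_row)]
        for fg_row, a_row, bg_row in zip(fg_img, matte, bg_img)
    ]
```

Where the matte is 0 the background shows through unchanged; where it is 1 only the synthesized actor is visible, and fractional values blend the two along the silhouette.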

Fig 2. Overview. The system maintains a database of multi-view video sequences of an actor performing simple motions. Skeleton motion and surface geometry are captured for each sequence using marker-less performance capture. The user is given a skeleton and surface mesh model of the actor. By specifying skeletal motion and camera settings, the user creates a query animation. Our algorithm then synthesizes a photo-realistic video of the actor performing the user-designed animation by selecting appropriate poses and their respective images from the database and warping these images to the target view.
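The final step in the overview, warping database images to the target view, can be illustrated with a simple vertex-driven backward warp. Assuming every database frame and the query animation deform the same template mesh, each vertex projects to one point in the database image and one point in the target image; the sketch interpolates the resulting 2D offsets with inverse-distance weighting to find where each target pixel should sample the source image. The pinhole intrinsics `(f, cx, cy)`, camera-space vertex inputs, and the inverse-distance interpolation are assumptions for illustration, not the paper's warp.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Intrinsics = Tuple[float, float, float]  # hypothetical: (focal length f, cx, cy)


def project(p: Vec3, cam: Intrinsics) -> Vec2:
    """Pinhole projection of a camera-space point (z > 0) to pixel coordinates."""
    f, cx, cy = cam
    x, y, z = p
    if z <= 0.0:
        raise ValueError("point must lie in front of the camera")
    return (f * x / z + cx, f * y / z + cy)


def correspondences(src_verts: Sequence[Vec3], dst_verts: Sequence[Vec3],
                    src_cam: Intrinsics, dst_cam: Intrinsics) -> List[Tuple[Vec2, Vec2]]:
    """Pair each vertex's source-image position with its target-image position.

    src_verts: template-mesh vertices in the database camera's frame.
    dst_verts: the same vertices, posed by the query, in the target camera's frame.
    """
    if len(src_verts) != len(dst_verts):
        raise ValueError("both meshes must share the same vertex list")
    return [(project(s, src_cam), project(d, dst_cam))
            for s, d in zip(src_verts, dst_verts)]


def source_location(q: Vec2, pairs: List[Tuple[Vec2, Vec2]],
                    power: float = 2.0) -> Vec2:
    """Source-image position sampled by target pixel q (inverse-distance-weighted offsets)."""
    if not pairs:
        raise ValueError("need at least one correspondence")
    sum_w = sum_x = sum_y = 0.0
    for (sx, sy), (dx, dy) in pairs:
        d2 = (q[0] - dx) ** 2 + (q[1] - dy) ** 2
        if d2 == 0.0:
            return (sx, sy)  # q lies exactly on a projected vertex
        w = d2 ** (-power / 2.0)
        sum_w += w
        sum_x += w * (sx - dx)
        sum_y += w * (sy - dy)
    return (q[0] + sum_x / sum_w, q[1] + sum_y / sum_w)
```

Sampling the database image at `source_location(q, pairs)` for every target pixel `q` yields the warped image. A practical warp would likely also need to handle occlusions and limit each pixel to nearby vertices rather than weighting the whole mesh.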

Citation

Xu, Feng, Yebin Liu, Carsten Stoll, James Tompkin, Gaurav Bharaj, Qionghai Dai, Hans-Peter Seidel, Jan Kautz, and Christian Theobalt. "Video-based characters: creating new human performances from a multi-view video database." In ACM SIGGRAPH 2011 papers, pp. 1-10. 2011.

@incollection{xu2011video,
  title={Video-based characters: creating new human performances from a multi-view video database},
  author={Xu, Feng and Liu, Yebin and Stoll, Carsten and Tompkin, James and Bharaj, Gaurav and Dai, Qionghai and Seidel, Hans-Peter and Kautz, Jan and Theobalt, Christian},
  booktitle={ACM SIGGRAPH 2011 papers},
  pages={1--10},
  year={2011}
}