IEEE ICCV 2021

LocalTrans: A Multiscale Local Transformer Network for Cross-Resolution Homography Estimation

Ruizhi Shao1,4*, Gaochang Wu2*, Yuemei Zhou1, Ying Fu3, Lu Fang1, Yebin Liu1

1Tsinghua University 2Northeastern University 3Beijing Institute of Technology 4Zhuohe Technology

Fig 1. We present a multiscale local transformer network, dubbed LocalTrans, for homography estimation. LocalTrans achieves accurate homography estimation on challenging real-captured cross-resolution cases with resolution gaps of up to 10x. By warping each local high-resolution image onto the global low-resolution image using the estimated homography matrix, we achieve artifact-free stitching in this challenging case, substantially outperforming feature-based homography estimation.
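To make the warping step in Fig 1 concrete, the following minimal NumPy sketch shows how a 3x3 homography maps pixel coordinates of a local high-resolution image into the global low-resolution image. It is an illustration only: the function name `apply_homography` and the example matrix (a 1/6 scaling plus an offset) are hypothetical and are not taken from LocalTrans.

```python
import numpy as np

def apply_homography(H, pts):
    """Map (N, 2) pixel coordinates through a 3x3 homography H."""
    pts = np.asarray(pts, dtype=np.float64)
    ones = np.ones((pts.shape[0], 1))
    homog = np.hstack([pts, ones]) @ H.T      # (N, 3) homogeneous coordinates
    return homog[:, :2] / homog[:, 2:3]       # perspective divide

# Hypothetical example: a 600x400 local high-resolution crop that appears at
# 1/6 scale with offset (100, 50) in the global low-resolution image.
s = 1.0 / 6.0
H = np.array([[s, 0.0, 100.0],
              [0.0, s, 50.0],
              [0.0, 0.0, 1.0]])
corners = np.array([[0, 0], [600, 0], [600, 400], [0, 400]], dtype=np.float64)
warped_corners = apply_homography(H, corners)
print(warped_corners)  # corners land inside [100, 200] x [50, ~116.7]
```

Warping a whole image, rather than just its corners, is usually delegated to a library routine such as OpenCV's `cv2.warpPerspective`, which resamples every pixel through the same projective mapping.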

Abstract

Cross-resolution image alignment is a key problem in multiscale gigapixel photography, which requires estimating homography matrices from images with a large resolution gap. Existing deep homography methods concatenate the input images or features and neglect the explicit formulation of correspondences between them, which degrades accuracy in cross-resolution settings. In this paper, we treat cross-resolution homography estimation as a multimodal problem and propose a local transformer network embedded within a multiscale structure to explicitly learn correspondences between the multimodal inputs, namely, input images with different resolutions. The proposed local transformer computes a separate local attention map for each position in the feature map. By combining the local transformer with the multiscale structure, the network captures both long- and short-range correspondences efficiently and accurately. Experiments on both the MS-COCO dataset and the real-captured cross-resolution dataset show that the proposed network outperforms existing state-of-the-art feature-based and deep-learning-based homography estimation methods and accurately aligns images under a 10x resolution gap.
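The abstract does not spell out the exact attention formulation, so the following is only a minimal NumPy sketch of the general idea of a per-position local attention map, assuming standard scaled dot-product attention restricted to a k x k window of the other image's feature map. The names `local_attention` and `k` are hypothetical, and this is not the LocalTrans implementation. Applied at a coarser pyramid level, the same k x k window spans a proportionally larger area of the original image, which is one way a multiscale structure can cover both short- and long-range correspondences.

```python
import numpy as np

def local_attention(query_feat, key_feat, value_feat, k=3):
    """Per-position local attention between two feature maps (a sketch).

    query_feat, key_feat, value_feat: arrays of shape (H, W, C).
    Each query position (i, j) attends only to the k x k neighbourhood
    centred at (i, j) in key_feat, giving one local attention map per position.
    Returns (output of shape (H, W, C), attention maps of shape (H, W, k*k)).
    """
    H, W, C = query_feat.shape
    r = k // 2
    # Zero-pad keys/values so border positions still have k*k neighbours.
    pad = ((r, r), (r, r), (0, 0))
    kp = np.pad(key_feat, pad)
    vp = np.pad(value_feat, pad)
    out = np.zeros_like(query_feat, dtype=np.float64)
    attn = np.zeros((H, W, k * k))
    for i in range(H):
        for j in range(W):
            # Padded index i covers original rows i - r .. i + r.
            keys = kp[i:i + k, j:j + k].reshape(k * k, C)
            vals = vp[i:i + k, j:j + k].reshape(k * k, C)
            logits = keys @ query_feat[i, j] / np.sqrt(C)
            logits -= logits.max()            # numerical stability
            w = np.exp(logits)
            w /= w.sum()                      # softmax over the local window
            attn[i, j] = w
            out[i, j] = w @ vals
    return out, attn

# Usage with random, hypothetical features from the two input images.
rng = np.random.default_rng(0)
f_lr = rng.standard_normal((8, 8, 16))   # e.g. low-resolution image features
f_hr = rng.standard_normal((8, 8, 16))   # e.g. high-resolution image features
out, attn = local_attention(f_lr, f_hr, f_hr, k=5)
print(out.shape, attn.shape)  # (8, 8, 16) (8, 8, 25)
```

Zero padding lets padded border positions take part in the softmax; a production implementation would more likely mask them out and vectorize the double loop.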

[arXiv] [Code (coming soon)]

Overview

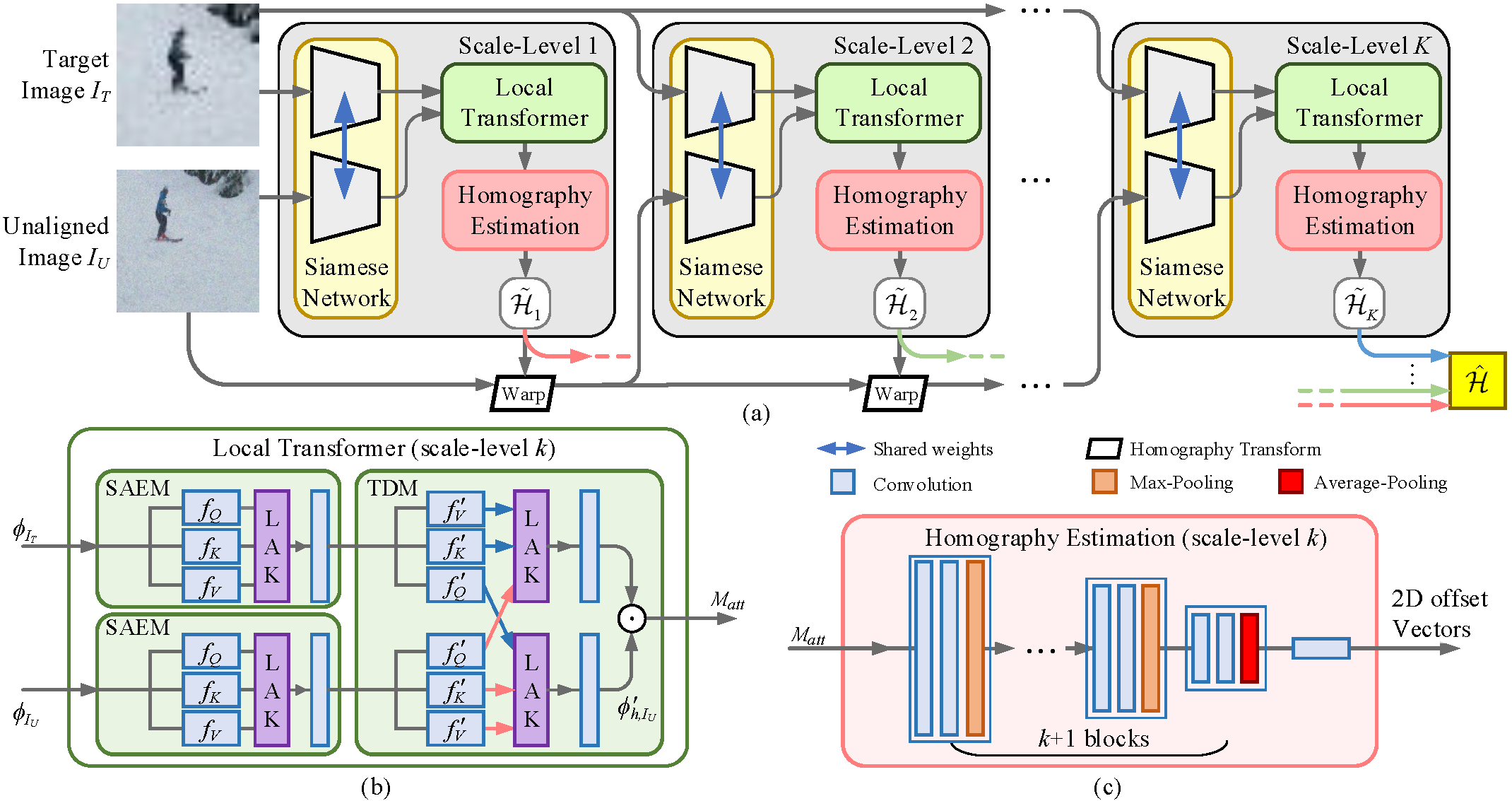

Fig 2. Architecture of the proposed LocalTrans network for homography estimation. (a) Overall structure of LocalTrans; (b) architecture of the local transformer, which captures correspondences at different scales via a local self-attention encoder module (SAEM) and a local transformer decoder module (TDM); (c) architecture of the homography estimation module, which takes local attention maps and high-level features as input to estimate homography matrices in a coarse-to-fine manner.
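The caption does not detail how estimates are passed between scales. As one common way to realize coarse-to-fine homography estimation, offered here as an assumption rather than the paper's procedure, a homography estimated on images downscaled by a factor s can be re-expressed at the finer scale as S H S^-1 with S = diag(s, s, 1), and a residual homography estimated after warping can then be composed on top. The helper names `upscale_homography` and `refine` are hypothetical, and H is assumed to map source coordinates to target coordinates.

```python
import numpy as np

def upscale_homography(H_coarse, s):
    """Express a homography estimated on images downscaled by `s` in
    finer-scale pixel coordinates: H_fine = S @ H_coarse @ inv(S),
    with S = diag(s, s, 1)."""
    S = np.diag([s, s, 1.0])
    return S @ H_coarse @ np.linalg.inv(S)

def refine(H_prev, H_residual):
    """Compose a residual homography (estimated after warping the source
    with H_prev) on top of the previous estimate."""
    H = H_residual @ H_prev
    return H / H[2, 2]   # fix the projective scale

# Hypothetical example: a translation of (5, 3) found at half resolution
# becomes (10, 6) at full resolution; a residual shift of (1, -1) gives (11, 5).
H_c = np.array([[1.0, 0.0, 5.0], [0.0, 1.0, 3.0], [0.0, 0.0, 1.0]])
H_f = upscale_homography(H_c, 2.0)
dH = np.array([[1.0, 0.0, 1.0], [0.0, 1.0, -1.0], [0.0, 0.0, 1.0]])
H_final = refine(H_f, dH)
print(H_final)
```

Composing residuals rather than re-estimating from scratch keeps each finer level focused on small corrections, which is the usual motivation for coarse-to-fine schemes.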

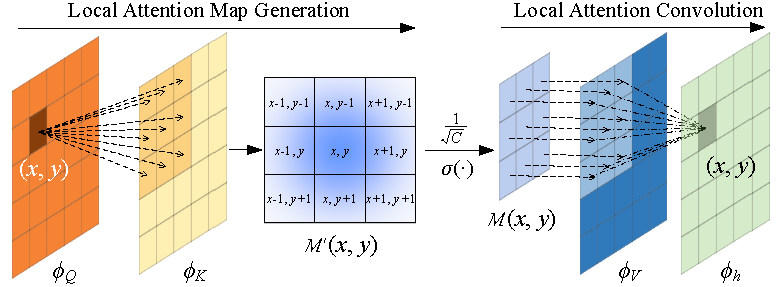

Fig 3. The process of Local Attention Kernel (LAK).

Results

Fig 4. Visual evaluation on the multiscale gigapixel dataset (top, 6x) and the cross-resolution stereo dataset (bottom, 10x). We combine the G and B channels of the aligned image with the R channel of the target image, so misaligned pixels appear as red or green ghosts.
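The channel-mixing visualization described in Fig 4 can be sketched in a few lines of NumPy. This assumes RGB channel order (images loaded with OpenCV are BGR, so the red channel would be index 2 instead), and the function name `ghost_overlay` is hypothetical.

```python
import numpy as np

def ghost_overlay(aligned_rgb, target_rgb):
    """Take the R channel from the target and the G, B channels from the
    aligned image. Where the two images agree the result looks like a
    normal image; misaligned structures show up as coloured ghosts."""
    assert aligned_rgb.shape == target_rgb.shape and aligned_rgb.shape[-1] == 3
    mixed = aligned_rgb.copy()
    mixed[..., 0] = target_rgb[..., 0]   # channel 0 = R in RGB order
    return mixed

# Tiny hypothetical example with 2x2 images.
aligned = np.zeros((2, 2, 3), dtype=np.uint8)
aligned[..., 1:] = 200                   # G = B = 200 in the aligned image
target = np.full((2, 2, 3), 50, dtype=np.uint8)
mixed = ghost_overlay(aligned, target)
print(mixed[0, 0])                       # R from target, G and B from aligned
```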

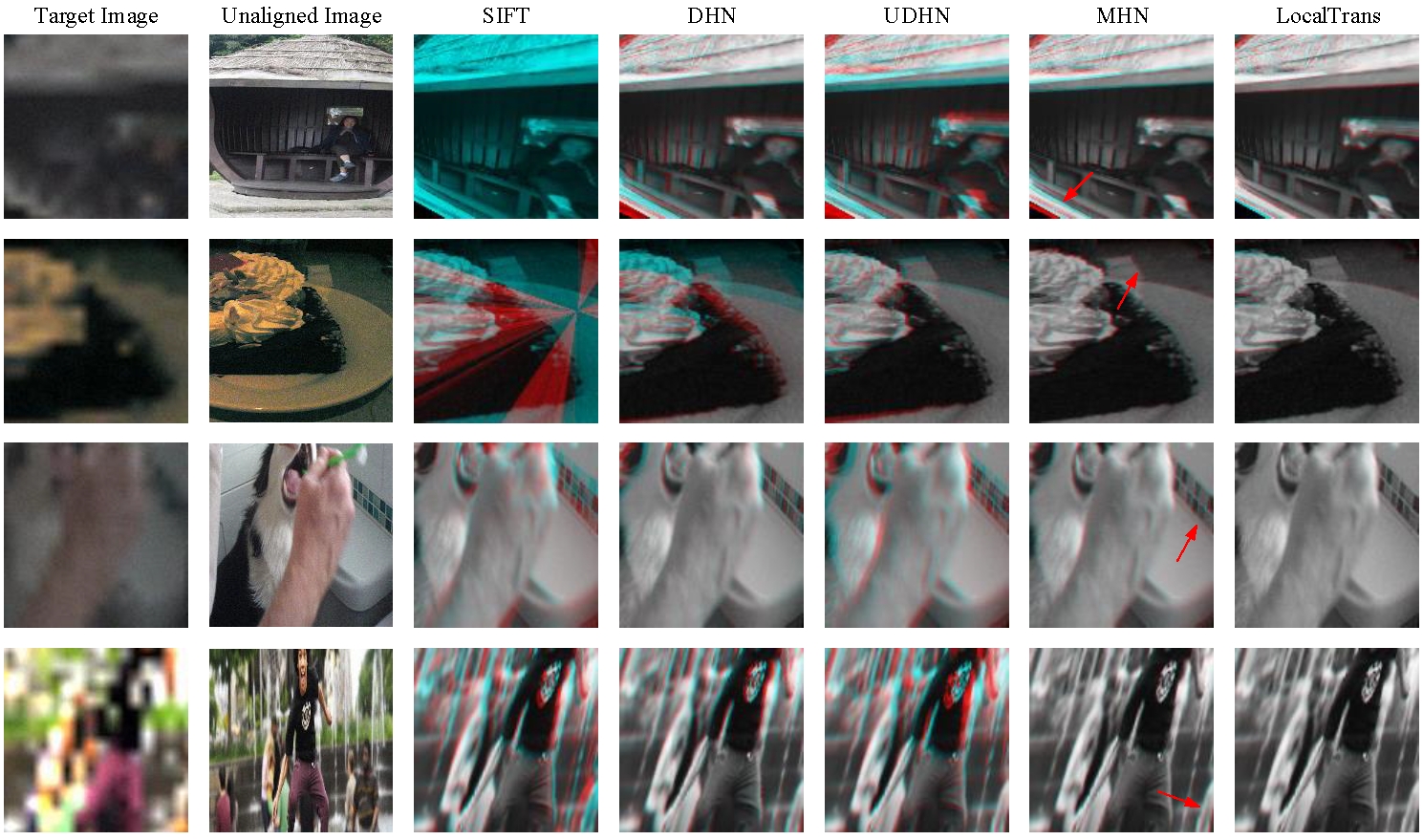

Fig 5. Visual evaluation on synthesized 8x cross-resolution data from MS-COCO.

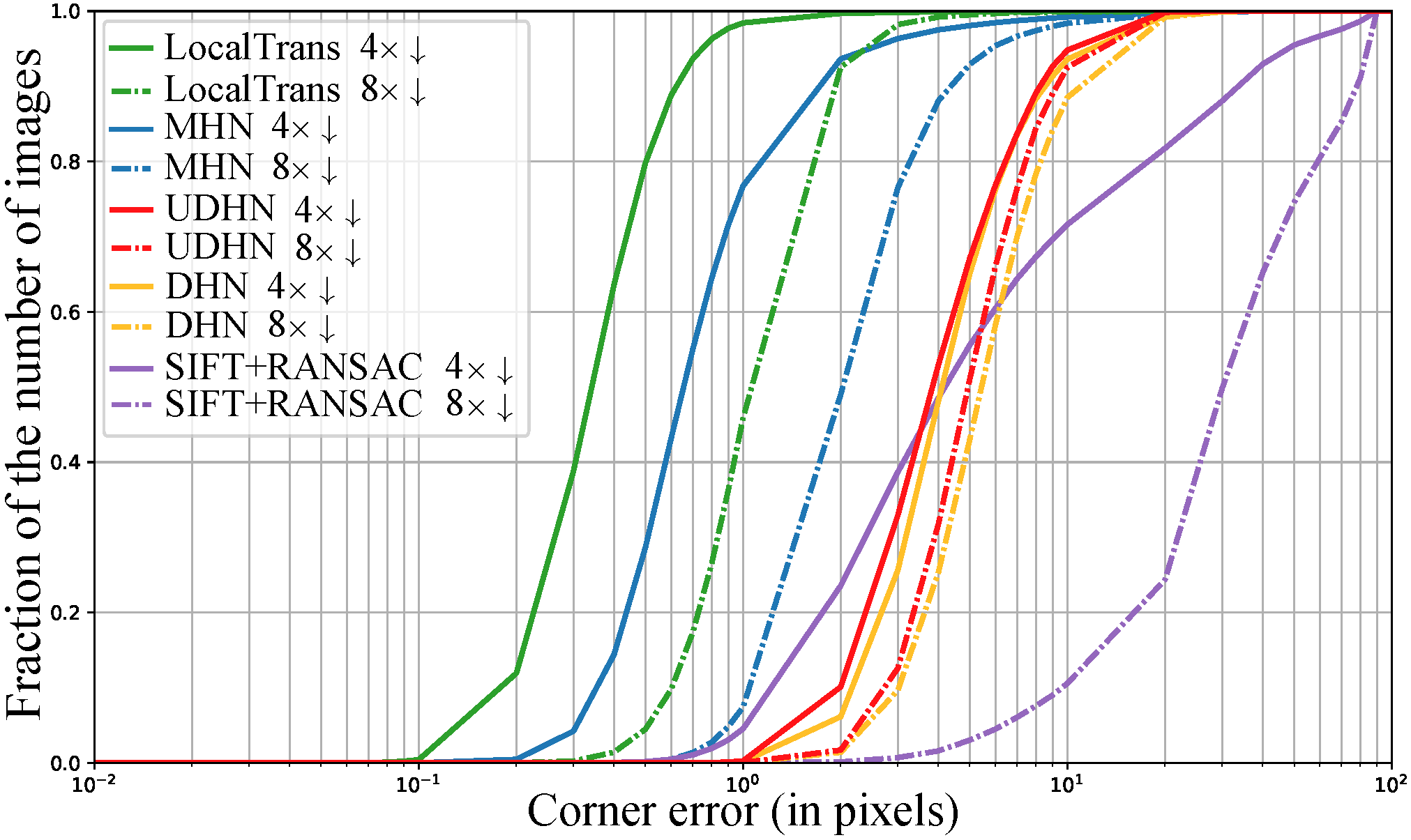

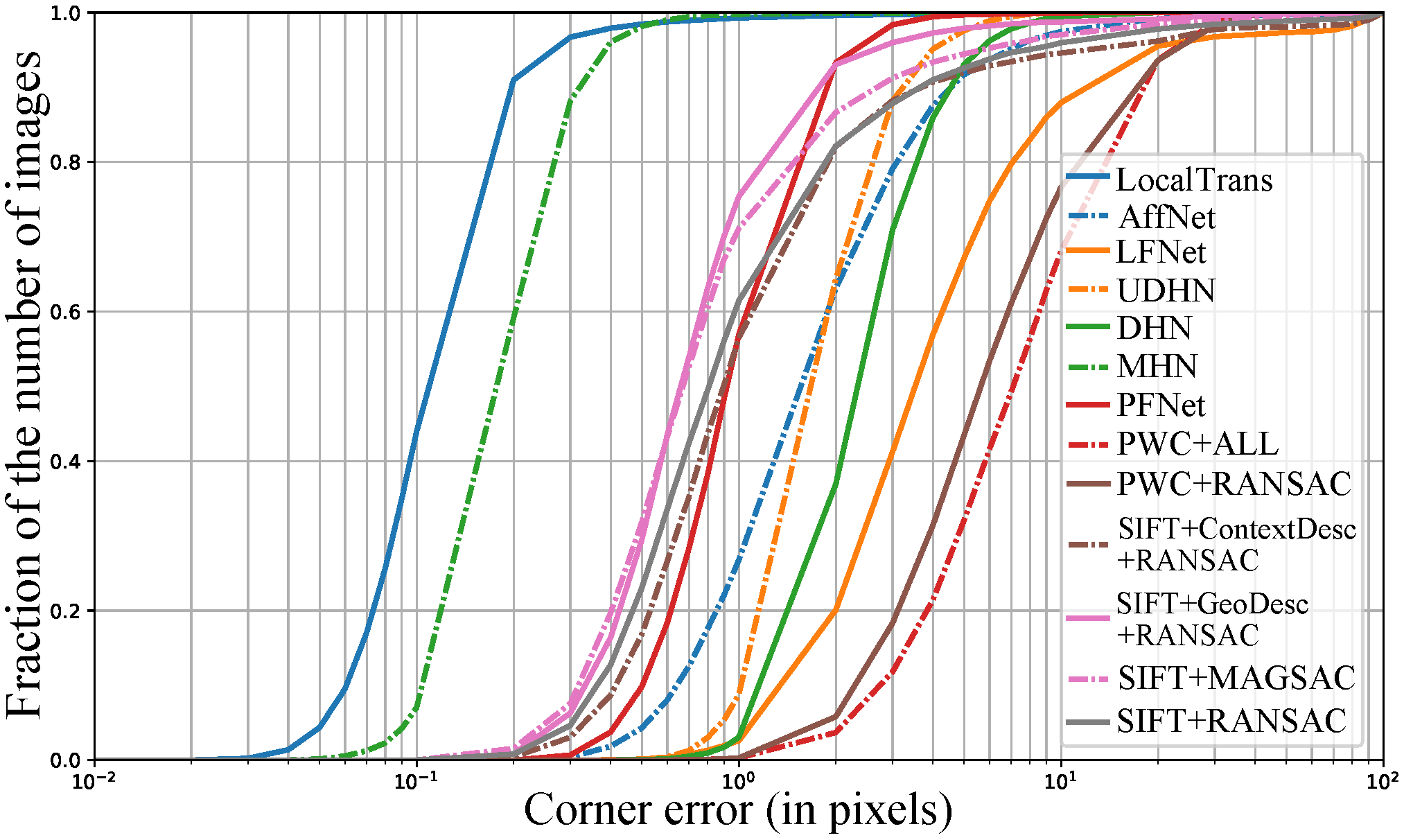

Fig 6. Quantitative evaluation on the MS-COCO dataset in the cross-resolution setting (left) and the common setting (right).

Technical Paper

Demo Video

Citation

Ruizhi Shao, Gaochang Wu, Yuemei Zhou, Ying Fu, Lu Fang and Yebin Liu. "LocalTrans: A Multiscale Local Transformer Network for Cross-Resolution Homography Estimation". IEEE ICCV 2021

@inproceedings{shao2021localtrans,
  title={LocalTrans: A Multiscale Local Transformer Network for Cross-Resolution Homography Estimation},
  author={Shao, Ruizhi and Wu, Gaochang and Zhou, Yuemei and Fu, Ying and Fang, Lu and Liu, Yebin},
  booktitle={IEEE/CVF International Conference on Computer Vision (ICCV)},
  year={2021},
}