CVPR 2021 (Oral)

Function4D: Real-time Human Volumetric Capture from Very Sparse Consumer RGBD Sensors

Tao Yu¹, Zerong Zheng¹, Kaiwen Guo², Pengpeng Liu³, Qionghai Dai¹, Yebin Liu¹

¹Tsinghua University, ²Google, ³Institute of Automation, Chinese Academy of Sciences


Abstract

Human volumetric capture is a long-standing topic in computer vision and computer graphics. Although sophisticated offline systems can achieve high-quality results, real-time volumetric capture of humans in complex scenarios remains challenging, especially with lightweight setups. In this paper, we propose a human volumetric capture method that combines temporal volumetric fusion with deep implicit functions. To achieve high-quality, temporally continuous reconstruction, we propose dynamic sliding fusion, which fuses neighboring depth observations while preserving topological consistency. Moreover, to generate detailed and complete surfaces, we propose detail-preserving deep implicit functions for RGBD input, which not only preserve the geometric details in the depth inputs but also produce more plausible texturing results. Experiments show that our method outperforms existing methods in terms of view sparsity, generalization capacity, reconstruction quality, and run-time efficiency.
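The abstract describes dynamic sliding fusion only at a high level. As a rough illustration of the underlying idea (not the authors' implementation), the sketch below performs a weighted per-voxel average of truncated signed distances (TSDF) over a short temporal window. It assumes that the neighboring frames have already been non-rigidly warped into the reference (center) frame; that tracking and warping step is omitted entirely. The names `sliding_fusion`, `truncate`, and `TRUNC`, the truncation value, and the per-frame weights are all hypothetical.

```python
from typing import List, Optional, Sequence

TRUNC = 0.02  # hypothetical truncation distance (meters)


def truncate(sdf: float, trunc: float = TRUNC) -> Optional[float]:
    """Normalize a signed distance (positive in front of the surface) to a
    TSDF value in [-1, 1]. Voxels more than `trunc` behind the observed
    surface are treated as unobserved and return None."""
    if sdf < -trunc:
        return None
    return min(1.0, sdf / trunc)


def sliding_fusion(window: Sequence[Sequence[float]],
                   weights: Optional[Sequence[float]] = None,
                   trunc: float = TRUNC) -> List[Optional[float]]:
    """Weighted per-voxel average of TSDF values from a window of frames.

    `window[f][v]` is the signed distance of voxel v observed in frame f,
    assumed to be already warped into the reference (center) frame.
    `weights[f]` is a per-frame confidence; uniform if omitted.
    Returns one fused value per voxel, or None if no frame observed it.
    """
    if weights is None:
        weights = [1.0] * len(window)
    fused: List[Optional[float]] = []
    for v in range(len(window[0])):
        num = den = 0.0
        for frame, w in zip(window, weights):
            d = truncate(frame[v], trunc)
            if d is None:
                continue  # voxel hidden behind the surface in this frame
            num += w * d
            den += w
        fused.append(num / den if den > 0.0 else None)
    return fused


# Usage: a 3-frame window over 2 voxels, weighting the center frame higher.
window = [[0.010, -0.050],   # previous frame
          [0.012,  0.030],   # reference (center) frame
          [0.008,  0.001]]   # next frame
print(sliding_fusion(window, weights=[0.5, 1.0, 0.5]))
```

Averaging over several aligned observations is what suppresses per-frame sensor noise; weighting the center frame more heavily is one plausible way to keep the result close to the current observation, but the weighting scheme here is an illustrative choice only.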

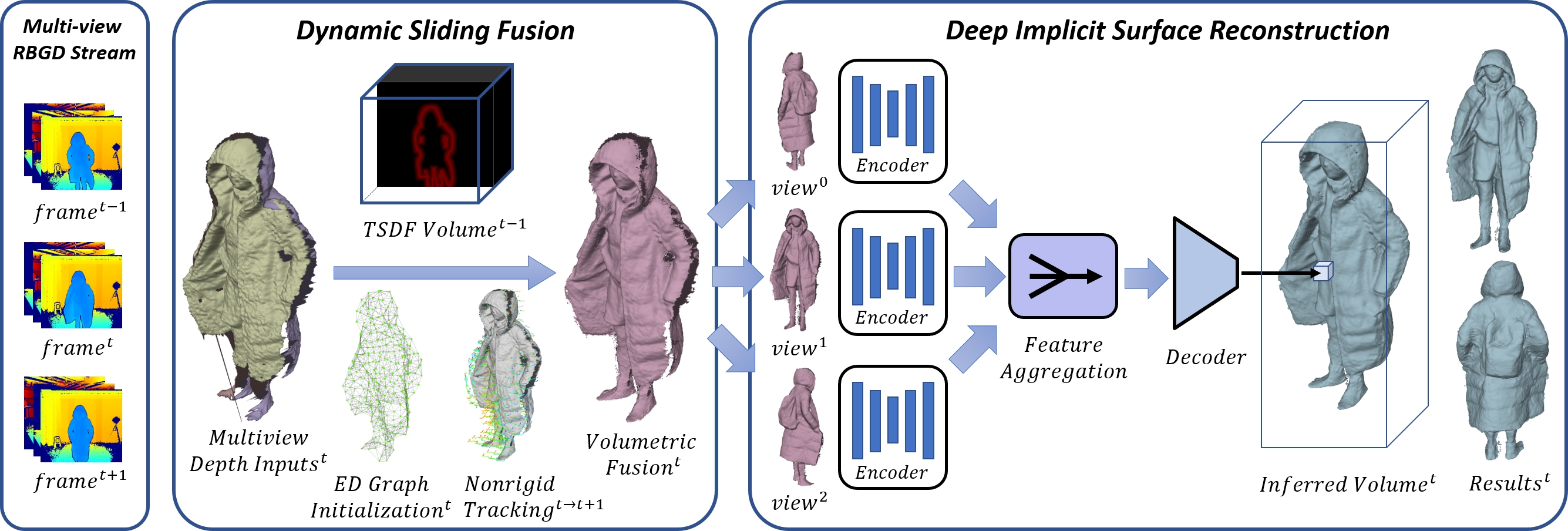

Fig 1. The proposed volumetric capture pipeline consists of two main steps: dynamic sliding fusion and deep implicit surface reconstruction. Given a group of synchronized multi-view RGBD inputs, we first perform dynamic sliding fusion, fusing each frame with its neighboring frames to produce denoised and temporally continuous fusion results. We then re-render multi-view RGBD images from the sliding fusion results at the original viewpoints. Finally, in the deep implicit surface reconstruction step, we apply detail-preserving implicit functions (consisting of multi-view image encoders, a feature aggregation module, and an SDF/RGB decoder) to generate detailed and complete reconstruction results.
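To make the encoder, aggregation, and decoder stages of Fig 1 concrete, here is a minimal sketch of querying a pixel-aligned implicit function at one 3D point from multi-view RGBD data. Everything in it is a hypothetical stand-in: the `RGBDView` container, nearest-pixel feature sampling, a truncated depth residual (observed depth minus point depth) as the depth cue, mean pooling in place of the paper's learned aggregation module, and an untrained random linear layer in place of the SDF/RGB decoder. The paper's actual networks are learned and are not specified on this page.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


class RGBDView:
    """One calibrated RGBD view (hypothetical container). `features` stands in
    for an image encoder's output: an H x W grid of C-dim feature lists."""

    def __init__(self, fx: float, fy: float, cx: float, cy: float,
                 R: Sequence[Sequence[float]], t: Vec3,
                 depth: List[List[float]], features: List[List[List[float]]]):
        self.fx, self.fy, self.cx, self.cy = fx, fy, cx, cy
        self.R, self.t = R, t
        self.depth, self.features = depth, features

    def project(self, p: Vec3) -> Optional[Tuple[float, float, float]]:
        """Pinhole projection of world point p: x_cam = R p + t -> (u, v, z)."""
        xc = [sum(self.R[i][j] * p[j] for j in range(3)) + self.t[i] for i in range(3)]
        if xc[2] <= 1e-8:
            return None  # point is behind the camera
        z = xc[2]
        return self.fx * xc[0] / z + self.cx, self.fy * xc[1] / z + self.cy, z


def view_feature(view: RGBDView, p: Vec3, trunc: float = 0.05) -> Optional[List[float]]:
    """Nearest-pixel image feature plus a truncated depth residual."""
    proj = view.project(p)
    if proj is None:
        return None
    u, v, z = proj
    col, row = int(round(u)), int(round(v))
    if not (0 <= row < len(view.depth) and 0 <= col < len(view.depth[0])):
        return None  # projects outside the image
    residual = max(-1.0, min(1.0, (view.depth[row][col] - z) / trunc))
    return view.features[row][col] + [residual]


def aggregate(feats: List[List[float]]) -> List[float]:
    """Mean-pool per-view features (a stand-in for a learned aggregation module)."""
    return [sum(f[k] for f in feats) / len(feats) for k in range(len(feats[0]))]


class LinearDecoder:
    """Untrained random linear map: aggregated feature -> (sdf, [r, g, b])."""

    def __init__(self, in_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.W = [[rng.gauss(0.0, 0.5) for _ in range(in_dim)] for _ in range(4)]

    def __call__(self, x: List[float]) -> Tuple[float, List[float]]:
        out = [sum(w * xi for w, xi in zip(row, x)) for row in self.W]
        sdf = math.tanh(out[0])                               # bounded signed distance
        rgb = [1.0 / (1.0 + math.exp(-o)) for o in out[1:]]   # colors in (0, 1)
        return sdf, rgb


def query(p: Vec3, views: List[RGBDView], decoder: LinearDecoder):
    """Encode -> aggregate -> decode for one 3D query point; None if unseen."""
    feats = [f for f in (view_feature(v, p) for v in views) if f is not None]
    return decoder(aggregate(feats)) if feats else None


# Usage: two 4x4 views with identity rotation, constant depth 1.0 and
# constant 2-dim features; the second camera is shifted along x.
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
depth = [[1.0] * 4 for _ in range(4)]
feats = [[[0.1, 0.2] for _ in range(4)] for _ in range(4)]
views = [RGBDView(2.0, 2.0, 1.5, 1.5, I3, (0.0, 0.0, 0.0), depth, feats),
         RGBDView(2.0, 2.0, 1.5, 1.5, I3, (0.1, 0.0, 0.0), depth, feats)]
decoder = LinearDecoder(in_dim=3)
print(query((0.0, 0.0, 1.0), views, decoder))
```

The depth residual is one simple way to let the decoder see how far a query point lies from the observed surface in each view, which is the kind of signal an RGBD-based implicit function can exploit and an RGB-only one cannot; with random weights, the printed SDF and color values are meaningless and only show the data flow.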

Results

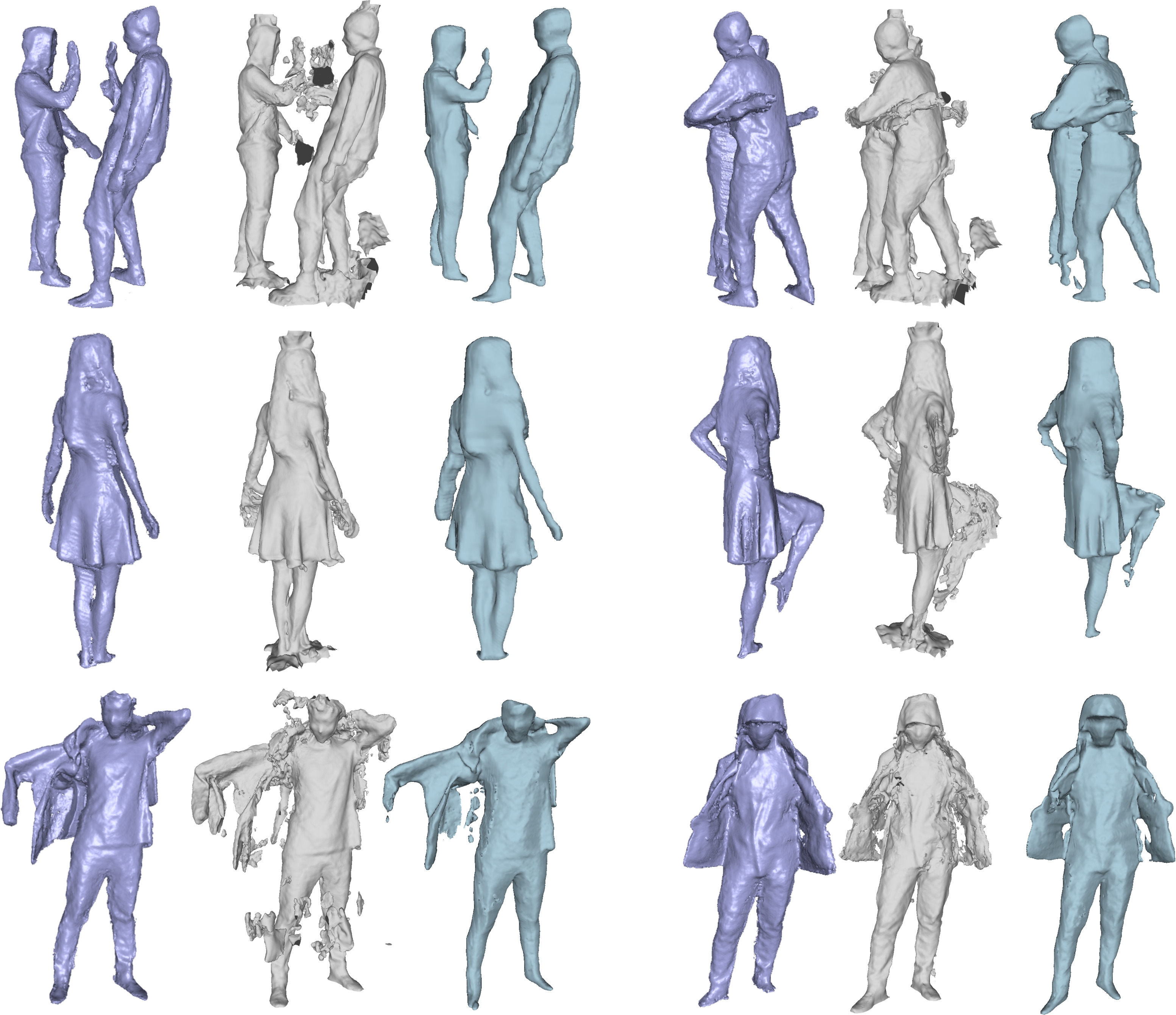

Fig 2. Volumetric capture results of various challenging scenarios using Function4D.

Fig 3. Temporal reconstruction results of a girl performing a fast dance. Our system generates temporally continuous, high-quality reconstruction results under challenging deformations (of the skirt) and severe topological changes (of the hair).

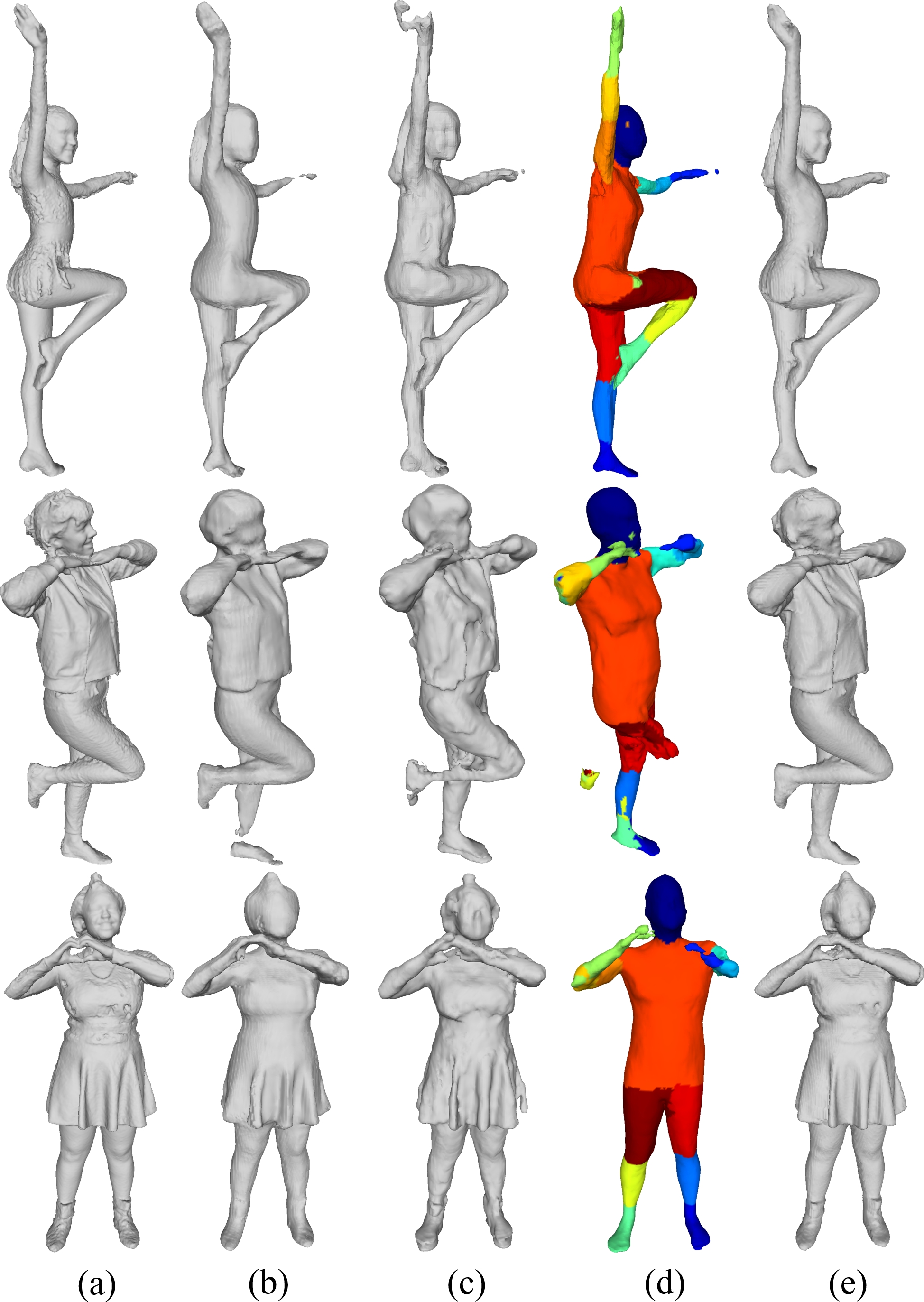

Fig 4. Qualitative comparison with Multi-view PIFu and IPNet on the synthetic evaluation dataset. From (a) to (e): the ground-truth model, the result of Multi-view PIFu, the outer-layer and inner reconstruction results of IPNet, and our result.

Fig 5. Qualitative comparison with Motion2Fusion and Multi-view PIFu (trained on our dataset) using real captured multi-view RGBD data. In each subfigure, from left to right: our result, Motion2Fusion, and Multi-view PIFu. Given very sparse, low-frame-rate depth inputs from consumer RGBD sensors, Motion2Fusion produces noisy results in regions with severe topological changes and under fast motion, because its non-rigid tracking deteriorates. Multi-view PIFu, in turn, produces over-smoothed results because it does not use depth information.

Technical Paper

Demo Video

THuman2.0 Dataset

Fig 7. We have released the captured training dataset, which contains 500 high-quality 3D human scans with various poses and clothing styles.

Supplementary Material

Citation

Tao Yu, Zerong Zheng, Kaiwen Guo, Pengpeng Liu, Qionghai Dai, Yebin Liu. "Function4D: Real-time Human Volumetric Capture from Very Sparse Consumer RGBD Sensors". CVPR 2021.

@InProceedings{tao2021function4d,
  title={Function4D: Real-time Human Volumetric Capture from Very Sparse Consumer RGBD Sensors},
  author={Yu, Tao and Zheng, Zerong and Guo, Kaiwen and Liu, Pengpeng and Dai, Qionghai and Liu, Yebin},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR2021)},
  month={June},
  year={2021},
}