The 41st International Conference and Exhibition on Computer Graphics and Interactive Techniques (SIGGRAPH 2014)

Intrinsic Video and Applications

Genzhi Ye, Elena Garces, Yebin Liu, Qionghai Dai, Diego Gutierrez

Department of Automation, Tsinghua University; Graphics and Imaging Lab, Universidad de Zaragoza

Abstract

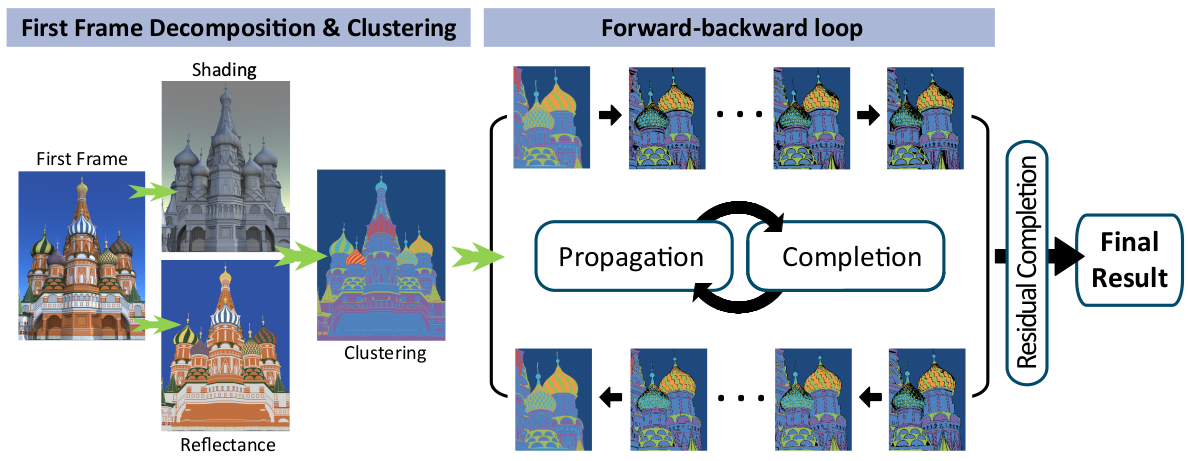

We present a method to decompose a video shot into its intrinsic components of reflectance and shading, plus a number of example applications in video editing such as segmentation, material editing, recolorization and color transfer. Intrinsic decomposition is an ill-posed problem, which becomes even more challenging in the case of video due to the need for temporal coherence and the potentially large memory requirements of a global approach. Additionally, user interaction should be kept to a minimum in order to ensure efficiency. We propose a probabilistic approach, formulating a Bayesian Maximum a Posteriori problem to drive the propagation of clustered reflectance values from the first frame, and defining additional constraints as priors on the reflectance and shading. We explicitly leverage temporal information in the video by building a causal-anticausal, coarse-to-fine iterative scheme, and by relying on optical flow information. We impose no restrictions on the input video, and show examples representing a varied range of difficult cases. Our method is the first one designed explicitly for video; moreover, it naturally ensures temporal consistency, and compares favorably against the state of the art in this regard.

[paper]

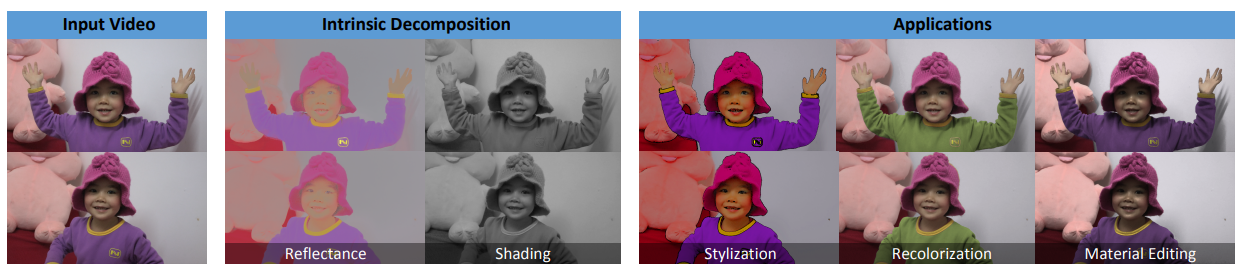

Fig 1. We propose a novel method for intrinsic video decomposition, which exhibits excellent temporal coherence. Additionally, we show a varied number of related applications such as video segmentation, recolorization, stylization and material editing (the last three included in the teaser image). Please refer to the accompanying video for these and all the other results shown in the paper.

Fig 2. Overview of our algorithm. We first decompose the initial frame into its intrinsic components. To reduce inconsistencies, we first cluster the initial reflectance values (Section 4). A forward-backward loop propagates this clustered reflectance in the temporal domain, leaving out unreliable pixels (Section 5). After this propagation step, some pixels may still remain unassigned; we rely on Retinex theory to apply shading constraints, thus obtaining the final result (Section 6).
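The propagation idea in the overview can be illustrated with a small NumPy sketch. This is not the paper's implementation: it assumes the standard multiplicative intrinsic model (image = reflectance × shading), takes a precomputed backward optical flow as given, and uses a plain nearest-neighbour warp in place of the probabilistic propagation described above. The function names `propagate_reflectance` and `shading_from_reflectance` are hypothetical, chosen only for this illustration.

```python
import numpy as np

def propagate_reflectance(refl_prev, backward_flow):
    """Warp reflectance from frame t-1 to frame t (nearest neighbour).

    refl_prev:     (H, W, 3) reflectance of frame t-1.
    backward_flow: (H, W, 2) per-pixel (dx, dy) pointing from frame t
                   back to the matching location in frame t-1 (assumed given).
    Returns the warped reflectance and a boolean mask of assigned pixels.
    Pixels whose source falls outside the image stay unassigned (NaN).
    """
    h, w = refl_prev.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.rint(xs + backward_flow[..., 0]).astype(int)
    src_y = np.rint(ys + backward_flow[..., 1]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    refl = np.full(refl_prev.shape, np.nan, dtype=float)
    refl[valid] = refl_prev[src_y[valid], src_x[valid]]
    return refl, valid

def shading_from_reflectance(frame, refl, eps=1e-6):
    """Under the model I = R * S, recover S = I / R where R is known.

    Unassigned (NaN) reflectance yields NaN shading at that pixel.
    """
    return frame / np.maximum(refl, eps)
```

Pixels left as NaN correspond to the "unassigned" pixels mentioned in the caption; in the paper these are resolved with Retinex-based shading constraints, which this sketch does not attempt.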

Demo Video

Citation

Ye, Genzhi, Elena Garces, Yebin Liu, Qionghai Dai, and Diego Gutierrez. "Intrinsic video and applications." ACM Transactions on Graphics (ToG) 33, no. 4 (2014): 1-11.

@article{ye2014intrinsic,
  title={Intrinsic video and applications},
  author={Ye, Genzhi and Garces, Elena and Liu, Yebin and Dai, Qionghai and Gutierrez, Diego},
  journal={ACM Transactions on Graphics (ToG)},
  volume={33},
  number={4},
  pages={1--11},
  year={2014},
  publisher={ACM New York, NY, USA}
}