CVPR 2021 (Oral)

POSEFusion: Pose-guided Selective Fusion for Single-view Human Volumetric Capture

Zhe Li¹, Tao Yu¹, Zerong Zheng¹, Kaiwen Guo², Yebin Liu¹

¹Tsinghua University, ²Google

Abstract

We propose POse-guided SElective Fusion (POSEFusion), a single-view human volumetric capture method that leverages both tracking-based methods and tracking-free inference to achieve high-fidelity, dynamic 3D reconstruction. Our novel reconstruction framework combines pose-guided keyframe selection with robust implicit surface fusion. This lets it fully exploit the advantages of both tracking-based and tracking-free inference methods, and it enables high-fidelity reconstruction of dynamic surface details even in invisible regions. We formulate keyframe selection as a dynamic programming problem to guarantee the temporal continuity of the reconstructed sequence. Moreover, the robust implicit surface fusion uses an adaptive blending weight to preserve high-fidelity surface details and an automatic collision handling method to deal with potential self-collisions. Overall, our method enables high-fidelity, dynamic capture in both visible and invisible regions from a single RGBD camera, and experiments show that it outperforms state-of-the-art methods.
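To illustrate why a dynamic programming formulation can enforce temporal continuity, here is a minimal Viterbi-style sketch. It is a simplification under stated assumptions, not the paper's actual formulation: it picks a single candidate keyframe per frame, the per-frame cost matrix `unary_cost` (e.g. pose dissimilarity minus a visibility-complementarity bonus) is hypothetical, and `switch_penalty` is a hypothetical cost charged whenever the selection changes between consecutive frames.

```python
import numpy as np


def select_keyframes(unary_cost, switch_penalty):
    """Pick one candidate keyframe per frame by dynamic programming.

    unary_cost[t][k]: hypothetical cost of using candidate k for frame t.
    switch_penalty: hypothetical cost added when the chosen candidate changes
    between frames t-1 and t, which discourages temporal flicker.
    Returns the list of chosen candidate indices, one per frame.
    """
    cost = np.asarray(unary_cost, dtype=float)
    T, K = cost.shape
    acc = np.empty((T, K))               # minimal accumulated cost ending in k at t
    back = np.zeros((T, K), dtype=int)   # best predecessor for each (t, k)
    acc[0] = cost[0]
    change = switch_penalty * (1.0 - np.eye(K))  # zero on the diagonal (no switch)
    for t in range(1, T):
        # transition[j, k]: best cost reaching j at t-1, then moving to k at t
        transition = acc[t - 1][:, None] + change
        back[t] = np.argmin(transition, axis=0)
        acc[t] = cost[t] + transition[back[t], np.arange(K)]
    # Backtrack from the cheapest final state.
    path = [int(np.argmin(acc[-1]))]
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return path[::-1]
```

With `unary_cost = [[0.0, 1.0], [0.6, 0.5], [0.0, 1.0]]`, a zero penalty lets the selection jump to `[0, 1, 0]`, while `switch_penalty=0.5` keeps it at `[0, 0, 0]`. The pairwise term is what trades per-frame optimality for temporal smoothness.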

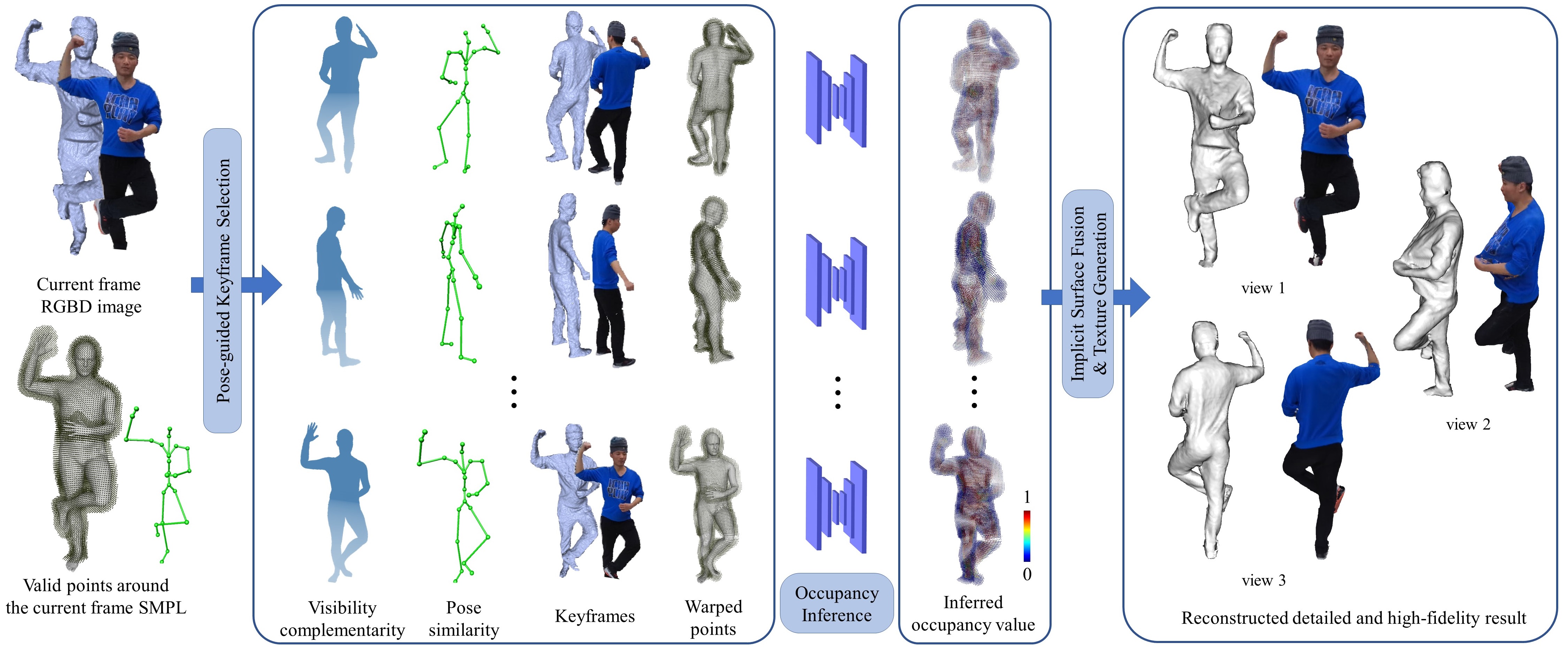

Fig 1. Reconstruction pipeline. First, we perform pose-guided keyframe selection to choose appropriate keyframes based on visibility complementarity and pose similarity. Valid points around the current SMPL model are then deformed to each keyframe using the SMPL motion and fed, together with the corresponding RGBD image, into a neural network. The network infers occupancy values for each keyframe, and we then integrate all inferred values to generate a complete model with high-fidelity, dynamic details. Finally, a high-resolution texture map is generated by projecting the reconstructed model onto each keyframe RGB image, and the texture map is then inpainted by a neural network.
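The integration step in the pipeline above can be pictured as a per-point weighted average of the occupancy values inferred in each keyframe. The sketch below assumes that form. The weighting rule in `blend_weight` (a visibility mask times a Gaussian falloff on a depth residual, plus a small floor) is a hypothetical stand-in for the paper's adaptive blending weight, and the parameters `sigma` and `floor` are illustrative. Row 0 is assumed to be the current frame.

```python
import numpy as np


def blend_weight(visible, depth_residual, sigma=0.02, floor=0.05):
    """Hypothetical adaptive weight for one keyframe's prediction.

    Trusts a keyframe more where the query point is visible in it and lies
    close to its observed depth; the small floor keeps predictions for
    occluded points from being discarded entirely.
    """
    visible = np.asarray(visible, dtype=float)
    r = np.asarray(depth_residual, dtype=float)
    return floor + visible * np.exp(-(r / sigma) ** 2)


def fuse_occupancy(occ, weights):
    """Weighted average of per-keyframe occupancy predictions.

    occ:     (K, N) occupancy in [0, 1] for N query points in K keyframes.
    weights: (K, N) non-negative blending weights.
    Returns (N,) fused occupancy; where all weights are zero, falls back to
    row 0 (assumed here to be the current frame).
    """
    occ = np.asarray(occ, dtype=float)
    w = np.asarray(weights, dtype=float)
    total = w.sum(axis=0)
    fused = (w * occ).sum(axis=0) / np.maximum(total, 1e-12)
    return np.where(total > 0, fused, occ[0])
```

A surface would then typically be extracted from the fused field at the 0.5 level set (for example with marching cubes). That step, like the weighting rule, is an assumption of this sketch.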

Results

Fig 2. Results with dynamic and high-fidelity details reconstructed by our method. The bottom row shows the input view, and the top row shows a different rendering view. Dynamic and high-fidelity details are reconstructed in both visible and invisible regions.

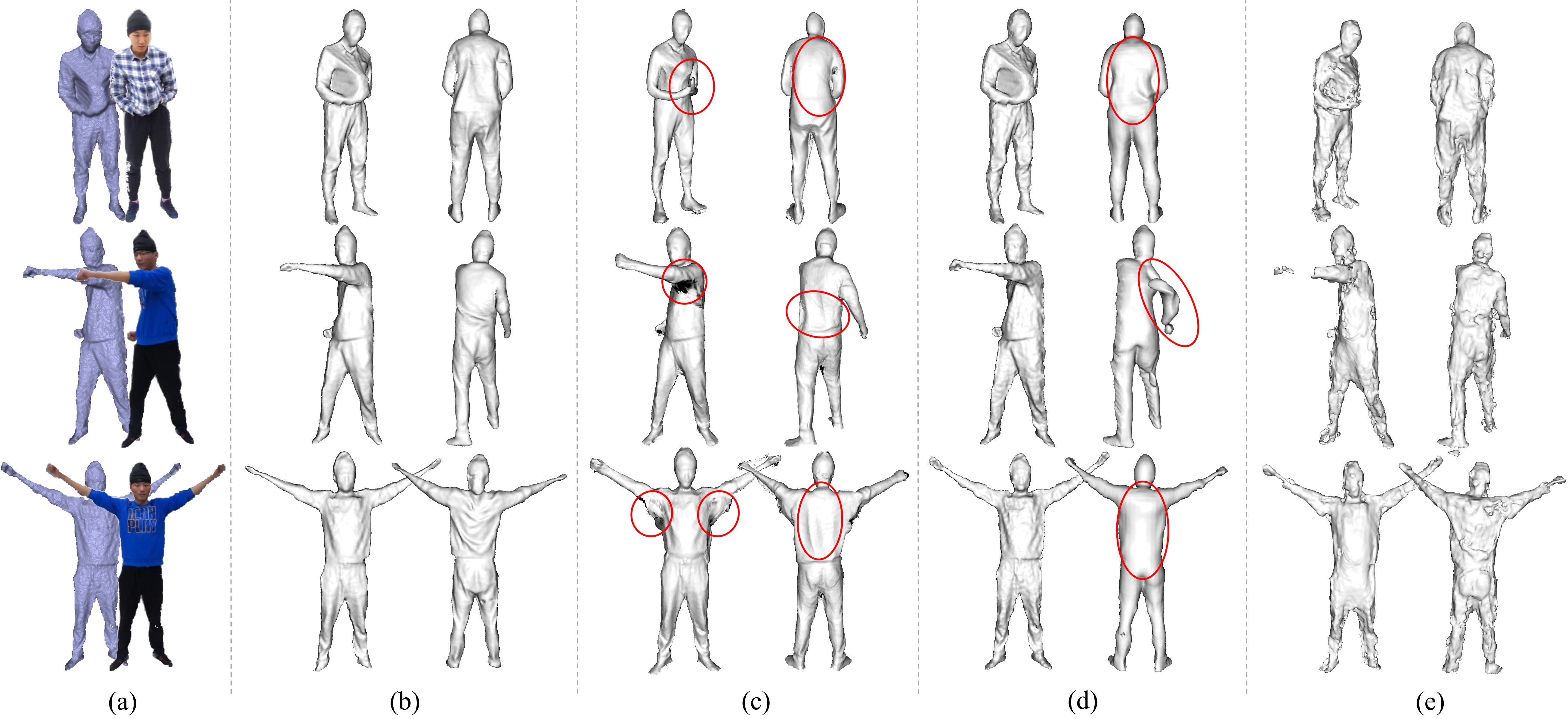

Fig 3. Qualitative comparison against other state-of-the-art methods: (a) input RGBD images of the current frame, and results by our method (b), DoubleFusion (c), RGBD-PIFu (d) and IP-Net (e).


Citation

Zhe Li, Tao Yu, Zerong Zheng, Kaiwen Guo, Yebin Liu. "POSEFusion: Pose-guided Selective Fusion for Single-view Human Volumetric Capture". CVPR 2021

@InProceedings{li2021posefusion,
  title={POSEFusion: Pose-guided Selective Fusion for Single-view Human Volumetric Capture},
  author={Li, Zhe and Yu, Tao and Zheng, Zerong and Guo, Kaiwen and Liu, Yebin},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition},
  month={June},
  year={2021},
}