ECCV 2022 Oral

DiffuStereo: High Quality Human Reconstruction via Diffusion-based Stereo Using Sparse Cameras

Ruizhi Shao, Zerong Zheng, Hongwen Zhang, Jingxiang Sun, Yebin Liu

Tsinghua University

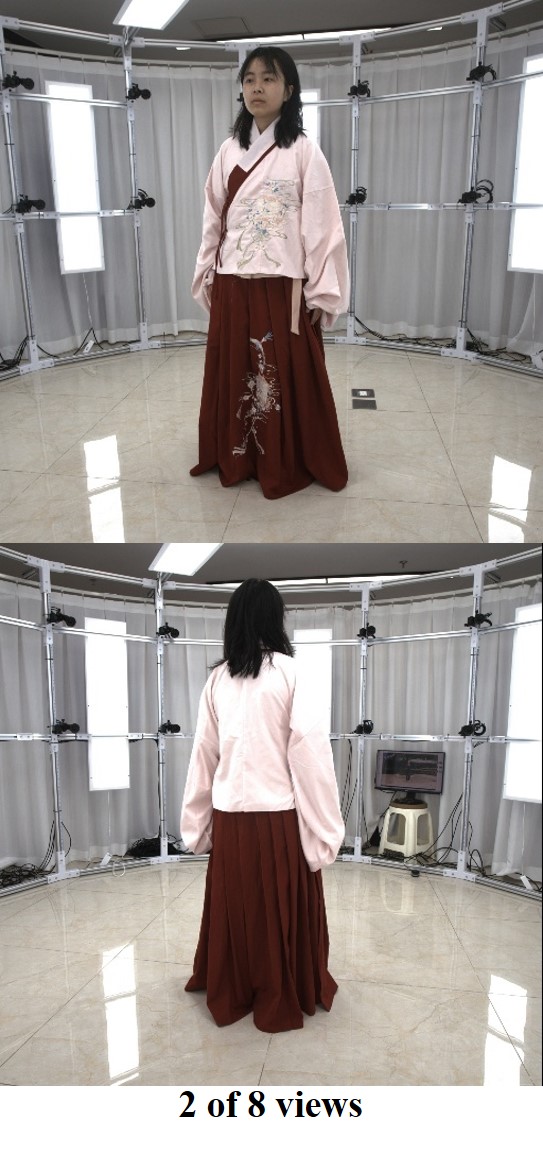

Fig 1. Our DiffuStereo system can reconstruct highly accurate 3D human models with sharp geometric details using only 8 RGB cameras.

Abstract

We propose DiffuStereo, a novel system that uses only sparse cameras (8 in this work) for high-quality 3D human reconstruction. At its core is a novel diffusion-based stereo module, which introduces diffusion models, a powerful class of generative models, into an iterative stereo matching network. To this end, we design a new diffusion kernel and additional stereo constraints that facilitate stereo matching and depth estimation in the network. We further present a multi-level stereo network architecture that handles high-resolution (up to 4K) inputs without an unaffordable memory footprint. Given a set of sparse-view color images of a human, the proposed multi-level diffusion-based stereo network produces highly accurate depth maps, which are then converted into a high-quality 3D human model through an efficient multi-view fusion strategy. Overall, our method enables automatic reconstruction of human models with quality on par with high-end dense-view camera rigs, while using a much more lightweight hardware setup. Experiments show that our method outperforms state-of-the-art methods by a large margin, both qualitatively and quantitatively.
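The abstract does not spell out the multi-view fusion strategy. As a minimal sketch of only its first step, assuming a pinhole camera with 3x3 intrinsics `K` and a 4x4 camera-to-world matrix per view, each refined depth map can be lifted to world-space points. The function names `backproject_depth` and `fuse_views` are hypothetical, and the plain concatenation in `fuse_views` is a stand-in, not the paper's fusion method.

```python
import numpy as np

def backproject_depth(depth, K, cam_to_world):
    """Lift a depth map (H, W) to world-space 3D points, one per valid pixel.

    Assumptions (not from the paper): depth is z-depth along the optical axis,
    0 marks background, K is a 3x3 pinhole matrix, cam_to_world is a 4x4 rigid transform.
    """
    h, w = depth.shape
    # Pixel grid: u is the column index, v is the row index.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0
    z = depth[valid]
    pix = np.stack([u[valid], v[valid], np.ones_like(z)], axis=0).astype(np.float64)  # (3, N)
    # Rays through each pixel (z-component 1), scaled so their z-component equals the depth.
    cam_pts = np.linalg.inv(K) @ pix * z  # (3, N)
    cam_h = np.vstack([cam_pts, np.ones((1, cam_pts.shape[1]))])  # homogeneous (4, N)
    return (cam_to_world @ cam_h)[:3].T  # (N, 3) world-space points

def fuse_views(depths, intrinsics, extrinsics):
    """Hypothetical naive fusion: concatenate the back-projected points of every view."""
    return np.concatenate(
        [backproject_depth(d, K, T) for d, K, T in zip(depths, intrinsics, extrinsics)],
        axis=0,
    )
```

A real fusion step would also need to reconcile overlapping points across views and extract a surface; the sketch stops at a merged point cloud.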


Overview

Fig 2. Overview of the DiffuStereo system. Our system consists of three key steps to reconstruct high-quality human models from sparse-view inputs: i) an initial human mesh is predicted by DoubleField and rendered as the coarse disparity flow; ii) the coarse disparity maps are refined by the diffusion-based stereo to obtain high-quality depth maps; iii) the initial human mesh and the high-quality depth maps are fused into the final high-quality human mesh.
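Step i) turns a rendered mesh into coarse disparity, and step ii) turns refined disparity back into depth. For a rectified stereo pair with focal length f (in pixels) and baseline B, the standard relation is d = f·B/Z. The sketch below assumes such a rectified setup; the helper names are hypothetical and the exact conversion used in DiffuStereo may differ.

```python
import numpy as np

def depth_to_disparity(depth, focal_px, baseline):
    """Convert a z-depth map to horizontal disparity for a rectified pair: d = f * B / Z.

    Pixels with depth <= 0 (background, no rendered surface) get disparity 0.
    """
    disp = np.zeros_like(depth, dtype=np.float64)
    valid = depth > 0
    disp[valid] = focal_px * baseline / depth[valid]
    return disp

def disparity_to_depth(disp, focal_px, baseline):
    """Inverse mapping Z = f * B / d; zero disparity stays zero (no surface)."""
    depth = np.zeros_like(disp, dtype=np.float64)
    valid = disp > 0
    depth[valid] = focal_px * baseline / disp[valid]
    return depth
```

For example, with f = 1000 px and B = 0.2 m, a surface point at 2 m maps to a disparity of 100 px.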

Fig 3. Illustration of the forward process and the reverse process in our diffusion-based stereo.
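To make the forward and reverse processes concrete, here is a minimal sketch of a standard Gaussian DDPM. This is explicitly not DiffuStereo's method: the paper designs its own diffusion kernel and stereo constraints. The signal `x0` is assumed, for illustration only, to be a disparity map (or a residual on top of the coarse disparity), and `eps_pred` stands in for the output of a learned denoising network. All function names are hypothetical.

```python
import numpy as np

def make_schedule(num_steps=100, beta_start=1e-4, beta_end=0.02):
    """Standard DDPM linear beta schedule plus cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars

def forward_diffuse(x0, t, alpha_bars, rng):
    """Forward process: sample x_t ~ N(sqrt(a_bar_t) * x0, (1 - a_bar_t) * I).

    x0 is assumed (not taken from the paper) to be a disparity map or disparity residual.
    """
    noise = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise
    return xt, noise

def reverse_step(xt, t, eps_pred, betas, alphas, alpha_bars, rng):
    """Reverse process: one ancestral DDPM step x_t -> x_{t-1} given predicted noise."""
    mean = (xt - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_pred) / np.sqrt(alphas[t])
    if t == 0:
        return mean  # no noise is added at the final step
    return mean + np.sqrt(betas[t]) * rng.standard_normal(xt.shape)
```

In a stereo setting, `eps_pred` would presumably come from a network conditioned on the image pair and the current disparity estimate; if the noise prediction is exact, the step at t = 0 recovers `x0` exactly.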

Results (THuman2.0)

Results (Real-World Dataset)

Demo Video

Citation

Ruizhi Shao, Zerong Zheng, Hongwen Zhang, Jingxiang Sun, Yebin Liu. "DiffuStereo: High Quality Human Reconstruction via Diffusion-based Stereo Using Sparse Cameras". ECCV 2022

@inproceedings{shao2022diffustereo,
  author = {Shao, Ruizhi and Zheng, Zerong and Zhang, Hongwen and Sun, Jingxiang and Liu, Yebin},
  title = {DiffuStereo: High Quality Human Reconstruction via Diffusion-based Stereo Using Sparse Cameras},
  booktitle = {ECCV},
  year = {2022}
}